Release-Level Retrospectives: Stepping up Your Game

From 2009 to 2012 I worked as the Director of Software Development and Lead Agile Coach at a company called iContact. If you’ve followed my writing, you know I’ve used my experiences there as a backdrop for many of my lessons learned. This is another one of those lessons, and if I may say so, I think it’s one of the most powerful.

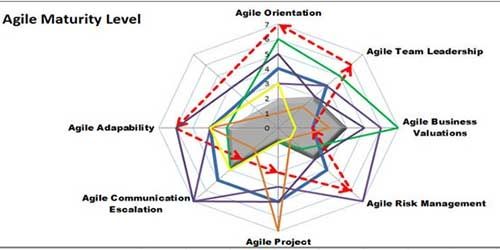

Very early on we adopted a Release Train model where we had a sprint tempo of 2-week sprints and quarterly releases. We would “commit to” a release schedule about a year in advance and share that with our customers. I modeled it after Dean Leffingwell’s early writings about Release Trains, which was significantly before he introduced SAFe.

In fact, I’ve been using Scrum of Scrums and Release Trains in my coaching and for personal use since about 2005 or so.

Release Retrospective

One of the things we stumbled upon early on was having a release-level retrospective at the end of the train. Yes, we would do retros for each sprint. And they would always focus on “connecting the dots” from the first to last sprint as we built our stories and features.

But we also started doing a release retrospective as a way of sharing release feedback across the entire organization. The retrospective went beyond release specifics and became a way to gather feedback on all aspects of our agile delivery approaches from virtually the entire company.

Related Article: Agile Chartering – Beginning With The End in Mind

We found that these retrospectives were instrumental in the success of each of our releases. They also helped us in transforming our entire organization to more agile thinking and behavior.

To illustrate the latter point, I’ll share a story about our VP of Sales.

Kevin Fitzgerald Story

Kevin was our Vice President of Sales at iContact. He and I had worked together at a previous company too, so we were fairly comfortable with each other. Kevin is a great leader when it comes to building sales teams and he did just that at iContact.

As part of his role, Kevin was very driven to build cross-functional relationships from sales throughout the organization. One of the ways he did that was to support the “transparency” of our Agile / Scrum methods. He encouraged his various teams to attend and engage in our sprint reviews. He also engaged with our Scrum of Scrums. But one of the more important opportunities where he really provided great feedback and leadership was encouraging his teams to attend our release retrospectives.

I was always pleasantly surprised by the number of sales folks who attended the retrospectives and with their level of engagement and feedback.

But the real reason I’m sharing this story is about something that Kevin shared with me on many occasions. Early on he pulled me aside after a release retrospective and gave our teams some high praise. He said –

Bob, I wish my teams were as transparent and mature as yours. Not only do your teams work hard, but they also make everything they do completely transparent. That includes their successes, efforts, and even their failures. But the thing that impresses me most is their ability to deal with any and all constructive feedback.

I listen to the feedback your teams typically get in the review and some of it is quite harsh. But they maturely take the good and the bad and they take action on it. It’s an incredible example of how they are all about continuous improvement and results.

To be honest, I often challenge my teams to be “like yours”. Keep up the great work my friend.

And the real point of this story is the example that our release retrospectives set for the entire organization.

Over time, iContact began to transform into an Agile organization. One of the critical practices to which I attribute that transformation was our whole-company release retrospectives.

Another Perspective

Mark Riedeman was a Software Manager who facilitated our release retrospectives for probably 2 years at iContact. Mark was a great facilitator, and he was also very personable and comfortable in front of large crowds.

Over time, he and I brainstormed our strategies and adjustments. One key trend was that company-wide engagement in the process steadily increased.

Here are some of Mark’s recollections in his own words:

Mark Riedeman’s perspective

Here’s what my recollections are from running the Release Retrospectives. I think they’re one of the most underutilized tools in Agile development. They can really be difficult to manage, delicate to facilitate and cumbersome to organize. But they are completely worth it.

As you recall, I tried to run the first one like a big Sprint Retrospective, but with more people. That didn’t work out so well. In true agile fashion, I tried to improve every time and ended up learning a lot of sometimes-awkward lessons. I started with the basics, big Post-it pads for Good, Bad, and Try, tons of Post-it Notes and pens around the room, and a company-wide invite. Needless to say, it wasn’t the easiest thing to facilitate. Here’s how I ended up running them and reasons for some of the choices made:

At first, I put Post-it Notes and pens around the room and had people write things down. I read them all and decided where they went so we could all vote on them to come up with a prioritized list by the end of the hour. That didn’t scale so well and we didn’t finish anywhere close to on time.

As the attendance grew, I had to figure out ways to get more of the input done faster so that we would have time to talk about ways to improve…the real goal of the meeting. I think the iterations on that went something like this:

- People don’t want to read their own written criticism out loud, so I collected all the cards, read them, and put them in a rough grouping on the boards and then did a final, pre-vote grouping. Took forever.

- Instead of my running around the room, I then had other people run around and collect cards for me so I could read them, put them on the boards, etc.

- Then, instead of my grouping them on the board, I’d just suggest a group theme to put them with, and then volunteers would organize them on the boards around the room.

- When that just wouldn’t scale anymore, we had to go electronic too, and I solicited anonymous feedback via a Google Form, categorized and organized it into groups ahead of time and sent it all out the morning of the retrospective. That way, everyone could see what was already said and what topics Mark might have to bring up in front of the whole company. (That boosted attendance.) We still did about 10-15 minutes of written sticky additions as well.

Here are some of the other lessons learned along the way:

- Facilitating this meeting is not for the faint of heart or the thin-skinned. You will inevitably have to manage discussions about internal company divisions and you become the spokesperson for the status of the company’s problems.

- Because of that, I always thought it was important that the same person lead all of the meetings. No one wants a committee to hear their complaints. They want a person who can be held accountable and the continuity seemed to really help.

- In the meeting invite, I would send out all of the notes from the previous release highlighting the big items from that meeting. I would also highlight the progress that was made from the last retrospective, or lack thereof.

- At the beginning of every meeting, I would start by reviewing the last Retrospective. In time, people start to notice the recurrence of the same kinds of problems. That’s the best value of the retrospectives. Not much can stay stuck under the rug forever and it forces people to start having difficult discussions about topics that really should be discussed.

- After every meeting, I collected every Post-it Note and typed them up in a Release Retrospective wiki page and email. Even if there were almost word-for-word variations of notes, no one wants to think you editorialized their feedback out, and they know what they wrote.

- Hand out Sharpies instead of pens with Post-its. You get more big-picture feedback when people have to use fewer words.

Running Really Big Retrospectives

In a nutshell, release retrospectives are simply BIG retrospectives. They cover a longer span of time and involve a broader audience.

In our case at iContact, we invited the entire company. There is some artistry to running big retrospectives, and Open Space techniques can often help with some of the dynamics, as can electronic tooling support before and after the retrospective.

Here is a link to other perspectives in running larger retrospectives – http://joakimsunden.com/2013/01/running-big-retrospectives-at-spotify/

And I’ll leave it to you to find your favorite Open Space guides to mine for hints about running effective retrospectives.

Wrapping Up

I would strongly encourage you to adopt retrospectives at various tempos across your agile team dynamics – sprint-ly, release-ly, quarterly, or whatever makes the most sense.

You’ll get various levels of feedback depending on whom you invite and how you frame the scope of the retrospective. But beyond the raw data, you’ll be exposing your firm to a fundamental agile practice, which just might influence your organization…by example.

Stay agile my friends,

Bob.

A few related articles:

· A nice article on the Scrum Alliance website about release retrospectives.

· A 5-part series posted on the VersionOne blog. Part-1 can be found here.